Jean-Michel DISCHLER

Professor at the University of Strasbourg.

Jean-Michel Dischler is a full Professor of Computer Science at the University of Strasbourg (unistra). In terms of local duties, he headed the Department of Computer Science at the Faculty of Mathematics and Computer Science, was vice-director of the former LSIIT lab until 2008, was responsible for the master's degree in Image and Computing (IICI) until 2009, and was joint director of the 3D Computer Graphics Group (IGG) in the new ICUBE lab until 2018. He currently leads the rendering and visualization research activities. He was a member of the former INRIA project CALVI (Scientific Computing and Visualisation), which ended in 2010.

His main research interests include texture synthesis and rendering, 3D acquisition, high-performance graphics, direct volume rendering of voxel data, and procedural modeling of natural phenomena.

He has served regularly on a number of program committees, including Eurographics, Pacific Graphics, EGSR, EG Parallel Graphics and Visualization, the EG Symposium on Natural Phenomena, and EuroVis, and chaired the Eurographics conference steering committee. He also served as associate editor of journals such as Computer Graphics Forum and The Visual Computer Journal. He was co-founder and vice president of the French chapter of Eurographics. He is a fellow of the Eurographics Association and chaired the EG professional board for a decade. In 2021, he became chairman of the Eurographics Association. He organized major international conferences in Strasbourg: EG’2014, as well as the Symposium on Rendering (EGSR) and High-Performance Graphics (HPG) conferences in 2019.

Funded research projects and developments

ASTex: a C++ library for texture generation

Selected research results

Cyclostationary Gaussian noise: theory and synthesis, CGF Vol.40(2), Eurographics 2021

Nicolas Lutz, Basile Sauvage and Jean-Michel Dischler

Abstract.

Stationary Gaussian processes have been used for decades in the context of procedural noises to model and synthesize textures with no spatial organization. In this paper we investigate cyclostationary Gaussian processes, whose statistics are repeated periodically. It enables the modeling of noises having periodic spatial variations, which we call "cyclostationary Gaussian noises". We adapt to the cyclostationary context several stationary noises along with their synthesis algorithms: spot noise, Gabor noise, local random-phase noise, high-performance noise, and phasor noise. We exhibit real-time synthesis of a variety of visual patterns having periodic spatial variations.

Semi-Procedural Textures using Point Process Texture Basis Functions, CGF Vol.39(4), EGSR 2020

P. Guehl, R. Allegre, J.‐M. Dischler, B. Benes, E. Galin

Abstract.

We introduce a novel semi‐procedural approach that avoids drawbacks of procedural textures and leverages advantages of data‐driven texture synthesis. We split synthesis in two parts: 1) structure synthesis, based on a procedural parametric model and 2) color details synthesis, being data‐driven. The procedural model consists of a generic Point Process Texture Basis Function (PPTBF), which extends sparse convolution noises by defining rich convolution kernels. They consist of a window function multiplied with a correlated statistical mixture of Gabor functions, both designed to encapsulate a large span of common spatial stochastic structures, including cells, cracks, grains, scratches, spots, stains, and waves. Parameters can be prescribed automatically by supplying binary structure exemplars. As for noise‐based Gaussian textures, the PPTBF is used as stand‐alone function, avoiding classification tasks that occur when handling multiple procedural assets. Because the PPTBF is based on a single set of parameters it allows for continuous transitions between different visual structures and an easy control over its visual characteristics. Color is consistently synthesized from the exemplar using a multiscale parallel texture synthesis by numbers, constrained by the PPTBF. The generated textures are parametric, infinite and avoid repetition. The data‐driven part is automatic and guarantees strong visual resemblance with inputs.

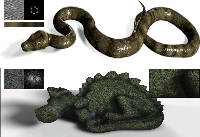

Procedural Physically based BRDF for Real-Time Rendering of Glints, CGF Vol.39(7), PG 2020

Xavier Chermain, Basile Sauvage, Jean-Michel Dischler, Carsten Dachsbacher

Abstract.

Physically based rendering of glittering surfaces is a challenging problem in computer graphics. Several methods have proposed off-line solutions, but none is dedicated to high-performance graphics. In this work, we propose a novel physically based BRDF for real-time rendering of glints. Our model can reproduce the appearance of sparkling materials (rocks, rough plastics, glitter fabrics, etc.). Compared to the previous real-time method [Zirr and Kaplanyan 2016], which is not physically based, our BRDF uses normalized NDFs and converges to the standard microfacet BRDF [Cook and Torrance 1982] for a large number of microfacets. Our method procedurally computes NDFs with hundreds of sharp lobes. It relies on a dictionary of 1D marginal distributions: at each location two of them are randomly picked and multiplied (to obtain an NDF), rotated (to increase the variety), and scaled (to control standard deviation/roughness). The dictionary is multiscale, does not depend on roughness, and has a low memory footprint (less than 1 MiB).

Bi-Layer textures: a Model for Synthesis and Deformation of Composite Textures, CGF Vol.36(4), EGSR 2017

Geoffrey Guingo, Basile Sauvage, Jean-Michel Dischler, Marie-Paule Cani

Abstract.

We propose a bi-layer representation for textures which is suitable for on-the-fly synthesis of unbounded textures from an input exemplar. The goal is to improve the variety of outputs while preserving plausible small-scale details. The insight is that many natural textures can be decomposed into a series of fine scale Gaussian patterns which have to be faithfully reproduced, and some non-homogeneous, larger scale structure which can be deformed to add variety. Our key contribution is a novel, bi-layer representation for such textures. It includes a model for spatially-varying Gaussian noise, together with a mechanism enabling synchronization with a structure layer. We propose an automatic method to instantiate our bi-layer model from an input exemplar. At the synthesis stage, the two layers are generated independently, synchronized and added, preserving the consistency of details even when the structure layer has been deformed to increase variety. We show on a variety of complex, real textures, that our method reduces repetition artifacts while preserving a coherent appearance.

Multi-Scale Label-Map Extraction for Texture Synthesis, Siggraph 2016

Local random-phase noise for procedural texturing, Siggraph Asia 2014

On-the-Fly Multi-Scale Infinite Texturing from Example, Siggraph Asia 2013

Robust Fitting on Poorly Sampled Data for Surface Light Field Rendering and Image Relighting, CGF Vol. 32(6), 2013

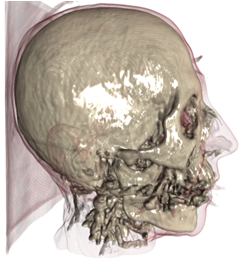

Pre-Integrated Volume Rendering with Non-Linear Gradient Interpolation, IEEE Vis 2010

Last updated: January 2022